Design principles for research & development performance measurement systems: a systematic literature review

André Ribeiro de Oliveira

andre.ribeiro@eng.uerj.br

State University of Rio de Janeiro – UERJ, Rio de Janeiro, Rio de Janeiro, Brazil.

Adriano Proença

adriano@poli.ufrj.br

Federal University of Rio de Janeiro – UFRJ, Rio de Janeiro, Rio de Janeiro, Brazil.

ABSTRACT

Goal: This paper aims to set the foundation on which a performance measurement system for the R&D function will be developed, assuming it not only as an organizational division, but also as a comprehensive process in order to better evaluate its efficiency and effectiveness.

Design/Methodology/Approach: This paper adopts a Design Science Research strategy as its methodological basis. It focuses on bringing guidelines from the body of organizational theory of knowledge to design effective management solutions for an R&D performance measurement system.

Results: A set of well-defined Design Principles to guide performance measurement system designs for R&D management.

Limitations of the investigation: Even though the design principles have been systematically obtained from the literature review, there was no adequacy test of these principles in practical cases, which should be conducted in future research.

Practical Implications: Managers and leaders of R&D teams may use theory-grounded guidelines to design their performance systems and to adjust such systems to their specific needs.

Originality/Value: The main practical contribution of the study findings is to provide a comprehensive set of guidelines to design an R&D Performance Measurement System, rather than proposing another new complete theoretical framework to add to the literature.

Keywords: Performance Measurement Systems; R&D Management; Literature Review; Design Principles; Design Science Research

1 INTRODUCTION

Innovation has been widely recognized as a core strategic approach for sustaining a competitive advantage in the market as well as a key to the development of any nation. Research findings have long confirmed that innovative firms outperform their non-innovative competitors in market share and in profitability in the long term (Boston Consulting Group, 2006).

Performance Measurement Systems (PMS) are among the most important management tools, and they are certainly among the most challenging (Neely, 1999). Some characteristics of Research and Development (R&D) management make designing a PMS for R&D a particularly complex managerial process: (i) the relevance of intangible elements such as knowledge, creativity, and motivation; (ii) uncertainty in business processes (concerning timing, budget, human resource commitment, etc.); and (iii) the unpredictability of actual results (Kerssens-van Drongelen et al., 2000; Tidd et al., 2005).

Managers have difficulty designing a PMS that effectively supports their decision-making process in such a context. Therefore, there is little consensus among academics and practitioners on how R&D performance measurement should be carried out (Jensen and Webster, 2009).

In the first and second generations of R&D organization, firms often adopted a limited set of input-output key performance indicators, such as number of patents and R&D expenditure (Rothwell, 1994). But the world has changed, and firms are now headed toward fifth-generation R&D management systems. In the current competitive environment, designing a PMS for R&D has become an even more complex endeavor.

This paper sets the foundation upon which an approach to design a PMS for the R&D activity can be developed, addressing it not only as a functional division, but also as a comprehensive process, in order to better evaluate its overall effectiveness. It also assumes that current processes are organized under the general concept of the fourth-and-fifth generation of R&D management systems (Rothwell, 1994). Such a concept leads to a specific approach for developing performance evaluation systems that is different from those already established in the literature.

However, instead of approaching this issue by proposing a new conceptual framework to support performance measurement, this paper focuses on a more flexible and adaptable procedure, which involves defining Design Principles for such systems through a Design Science Research strategy. The paper first sets the context and discusses the difficulties of measuring the effectiveness of R&D and its inherent innovation drive. It then presents the methodological approach that supports the research, namely the Design Science Research strategy. Next, it presents a synthesis of the conceptual frameworks identified in the literature and the design principles that may guide the design of PMSs. After that, the usage of such design principles is discussed. Finally, the conclusions and emerging guidelines are presented, as well as suggestions for future developments.

2 CHALLENGES FOR PERFORMANCE MEASUREMENT SYSTEMS IN R&D

Rothwell (1994) defines first-generation R&D systems as those that assumed as their mission to provide a “technology push” to move the firm, considering that a successful R&D would be the one that led to “more successful new products out.” Second-generation R&D systems, or “need-pull systems”, were developed as solutions for the rationalization of technological change efforts through bringing R&D activities more efficiently close to market demands.

First-generation R&D systems demand a linear logic for the innovation process metrics, focusing on inputs such as R&D investment, education expenditure, capital expenditure, research personnel, university graduates, technological intensity, etc.

Second-generation R&D systems complemented input indicators by accounting for the intermediate output of commercialization activities. Typical examples include patent counts, scientific publications, counts of new products and processes, high-tech trade, etc. (Milbergs and Vonortas, 2005).

Third-generation R&D systems were modeled assuming a portfolio of wide-ranging and systematic projects, eventually covering different sectors and interfirm activities, combining technology-push and need-pull models of innovation through interactive activities (Rothwell, 1994). The archetypal third-generation R&D management system brings with it a richer set of innovation indicators and indexes, based on surveys and the integration of publicly available data, such as those presented in the Global Innovation Index, by the OECD (specifically, in the Frascati Manual), in the analysis of National Innovation Systems, and others. The primary focus of these indicators is on benchmarking and rank ordering a nation’s capacity to innovate; however, some of these key performance indicators have been adopted at firm level as well.

The newest R&D management system generations (i.e. fourth-and-fifth generations) are the ones where one assumes R&D activities as part of an integrated innovation process, in which different teams of R&D work simultaneously, establishing stronger links with primary suppliers, keeping horizontal collaboration (such as "joint ventures" and strategic alliances), and meeting several demands and different customers in the market (Rothwell, 1994).

Metrics and PMSs that have been proposed in the literature so far have been seemingly unable to cope with these new R&D approaches. Some multilevel frameworks such as the ones seen in Tidd et al. (2005) and Hansen and Birkinshaw (2007) suggest a broader concept of innovation management. However, Davila et al. (2006) note that firms cannot summarize evaluation in a set of input-output measurements or even consider a few managerial aspects of innovation. The issue is whether there would be a theoretical framework to support a well-formulated set of key performance indicators to measure R&D and organizational innovation.

Some works in the literature are prominent candidates to meet the demands of fourth-and-fifth generation systems, such as Chiesa and Frattini (2009) and Ojanen and Vuola (2006). These frameworks, however, while superior to the input and output indicators of first- and second-generation R&D, are not sufficient to cope with the complexity of a comprehensive innovative organization.

Assessing R&D and innovation leads to the discussion of innovative structures designed to improve the efficiency and effectiveness of their related processes. Such structures are complex and specific; as a result, they require adaptations in their design. A firmly based set of guidelines to design R&D management systems, such as the one presented by Neely (1998) and Neely et al. (2000), must allow for a design that is coherent and aligned to the firm’s strategy and its overall innovation management system (Chiesa et al., 2007).

In this context, this paper proposes a set of Design Principles to guide the design of a PMS for R&D activities. It adopts a Design Science Research strategy (Romme and Endenburg, 2006; Dresch et al., 2013) as its methodological basis to accomplish this goal. Since the aim is to get closer to an effective artifact to manage the R&D function, this research strategy is the one expected to lead to a more robust solution.

3 A DESIGN SCIENCE APPROACH FOR DESIGNING A PERFORMANCE MEASUREMENT SYSTEM FOR R&D

While the “natural (and social) sciences” seek laws that portray the hard core of a given field of knowledge, explaining and predicting the behavior of nature or society, the “design sciences” aim to establish standards, which explain and predict the results of certain defined actions (architectures, procedures, protocols, etc.): they are “sciences of the artificial” (Simon, 1969).

Bunge (1967) named these standards “technological rules”. Such rules prescribe a course of action, indicating how someone should proceed, according to a finite set of actions, to achieve a certain goal. Technological rules were established years later by Van Aken (2004) as a convenient sample of general knowledge, relating an intervention or an artifact with a desired outcome or performance in a specific field of application.

More recently, Van Aken himself asked for “design propositions” to be used as a more accurate definition for such statements. A "design proposition" comprises a formulation such as: for this problem, within this context, it is useful and effective to implement this technical solution, which, through the mechanisms x and y, will lead to this outcome - assuming this is a satisfactory solution, not necessarily an optimal one (Van Aken, 2013).
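The formulation above has a fixed shape (problem, context, solution, mechanisms, outcome), which can be made explicit as a small record type. The following is only an illustrative sketch: the class name `DesignProposition`, its field names, and the example values are assumptions introduced here and do not come from Van Aken (2013).

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DesignProposition:
    """Hypothetical record holding the five slots of a design proposition."""
    problem: str
    context: str
    solution: str
    mechanisms: Tuple[str, ...]
    outcome: str

    def statement(self) -> str:
        # Render the slots in the order used by the textual formulation.
        mechanisms = " and ".join(self.mechanisms)
        return (
            f"For {self.problem}, within {self.context}, it is useful and "
            f"effective to implement {self.solution}, which, through "
            f"{mechanisms}, will lead to {self.outcome}."
        )


# Example values are invented purely to show the shape of the statement.
example = DesignProposition(
    problem="measuring R&D performance",
    context="a fourth-and-fifth generation R&D system",
    solution="a balanced set of KPIs",
    mechanisms=("alignment with strategy", "process visibility"),
    outcome="a satisfactory (not necessarily optimal) evaluation",
)
print(example.statement())
```

Keeping the mechanisms as a separate field makes it visible that a proposition claims not only that a solution works, but also why it is expected to work.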

In fact, the objective of this design science research strategy is to provide reliable, scientifically tested, and validated design propositions in management (expressed conceptually in, for example, theories, models, or frameworks, and validated empirically), together with tangible or intangible artifacts that can be implemented.

Romme and Endenburg (2006) proposed a sequential model of stages, from “natural/social (descriptive/analytical) science” to the actual design of working solutions in the field, in order to guide design researchers and developers. For them, these steps are necessary to transform organizational theory knowledge into useful artifacts (i.e., management solutions) and thus establish effective solutions in organizational design. As a transitional phase in this process, before reaching the design proposition stage, they identified the need for “construct principles” or “design principles”. These are understood as a set of binding propositions (“if A, then B”) based on advanced organizational theory that support the development of new organizational solutions or the redesign of existing ones. Some researchers in the organizational field have adopted such a perspective as a means to advance design knowledge in their fields, such as Salerno (2009), Oliveira (2010) and Gawer and Cusumano (2014).

This study seeks to establish such design principles as a cornerstone on which to design a PMS for R&D activities within the context of the challenges of contemporary innovation management. To introduce a set of design principles, it is imperative to begin with a systematic literature review in order to be able to identify the state of the art in the field.

4 SYSTEMATIC LITERATURE REVIEW

Literature reviews can examine old theories and propose new ones; they can provide guidance to researchers planning future studies; and they can also be used to methodically examine the reasons why different studies addressing the same question sometimes reach different conclusions (Petticrew and Roberts, 2006). A systematic literature review may be based on a quantitative bibliometric study with content analysis, as in Lopes et al. (2016) and Pereira et al. (2018). However, this paper does not present a bibliometric analysis. Instead, it focuses on qualitative content analysis in order to support the identification of guiding principles. It is based on a broad literature review that was performed in two stages: at the first stage, a set of key terms was used as input for the searches through the main database engine. At the second stage, titles and abstracts of the papers identified at the first stage were read in order to select the main articles, which were subsequently studied and analyzed.

First stage: searching in academic databases

The first stage was intended to gather the main publications related to the central theme of this work. Firstly, the search engine of Web of Science – Principal Collection (Clarivate Analytics) was used, accessing both the Science Citation Index Expanded and the Social Sciences Citation Index databases, and inserting the most appropriate key terms in the Title field. The time span chosen for the journals was suggested by Edison et al. (2013), which is the period between 1949 and 2016, coinciding with the introduction and early popularization of the innovation concept by J. Schumpeter in the US in 1942. Some filters by general category, such as “management” and “business”, were used in order to limit the scope. English, Spanish, and Portuguese were the languages chosen for this search. The selection consists only of complete articles, therefore excluding book reviews, proceedings, etc.

Some specific queries were defined in order to search for publications with a higher level of precision around the object of study. The following search string was used in the “Title” field: (innov* and (perform* or measur* or evaluat* or metric*)) OR (R&D and (perform* or measur* or evaluat* or metric*)) OR ("Research and Develop*" and (perform* or measur* or evaluat* or metric*)) OR ("Research & Develop*" and (perform* or measur* or evaluat* or metric*)). The query returned 1,545 results.
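The logic of this title query can be approximated locally, for instance to re-screen an exported list of titles. The sketch below is an assumption-laden approximation and not the Web of Science engine: it treats each `*` wildcard as "any run of word characters", ignores case, and uses the fact that the four OR-ed branches share the same measurement terms, so the query reduces to (any subject term) AND (any measurement term). The names `SUBJECT`, `MEASURE`, and `title_matches` are hypothetical.

```python
import re

# Measurement stems shared by every branch: perform*, measur*, evaluat*, metric*.
MEASURE = re.compile(r"\b(?:perform|measur|evaluat|metric)\w*", re.IGNORECASE)

# Subject alternatives: innov*, R&D, "Research and Develop*", "Research & Develop*".
SUBJECT = re.compile(
    r"\binnov\w*|\bR&D\b|\bresearch\s+(?:and|&)\s+develop\w*",
    re.IGNORECASE,
)


def title_matches(title: str) -> bool:
    """Return True when a title has both a subject term and a measurement term."""
    return bool(SUBJECT.search(title)) and bool(MEASURE.search(title))


# Invented titles, used only to exercise the filter.
print(title_matches("Measuring R&D performance"))
print(title_matches("Innovation in small firms"))
```

A database engine may apply stemming, field rules, or tokenization that differ from this regular-expression reading, so counts obtained this way should not be expected to reproduce the reported number of results.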

Similar search criteria were adopted in reference databases such as Scopus, Engineering Village, and Proquest. These databases were chosen because their search engines support advanced operations, such as wildcards and filters, as well as selection and export functions. Even though each database has its particularities, the same search logic was maintained. For example, in the Engineering Village search engine, it is possible to filter results by controlled vocabulary, allowing a more precise search than that conducted with the Web of Science general categories.

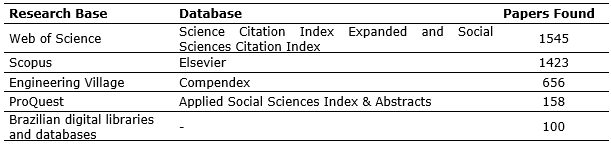

There were also searches in Brazilian digital libraries and databases, such as National Theses Portal (from the service available at Biblioteca Digital de Dissertações e Teses/Instituto Brasileiro em Ciência e Tecnologia - BDTD/IBCT), Scielo Brazil, Revista de Administração da Universidade de São Paulo (RAUSP), and Revista de Administração de Empresas da Fundação Getúlio Vargas (RAE). Chart 1 shows the findings.

Chart 1. Number of papers found in each scientific research base

Source: The authors.

Several articles found in one database also appeared in searches of other databases. These findings were consolidated in order to avoid duplicated articles. This consolidation process was supported by reference management software (Mendeley). The use of this tool also guided the choice of databases: those with selection and export functions were prioritized.
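The consolidation step can be illustrated with a minimal sketch. It is not a description of how the reference manager works internally; it only assumes that each exported record carries a title and a year, and it treats two records as duplicates when their normalized titles and years coincide. The function names and the sample records are hypothetical.

```python
import re
import unicodedata
from typing import Dict, Iterable, List


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9]+", " ", text.lower())
    return text.strip()


def consolidate(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first record seen for each (normalized title, year) key."""
    seen = set()
    unique = []
    for record in records:
        key = (normalize_title(record["title"]), record.get("year", ""))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Invented records standing in for exports from different databases.
records = [
    {"title": "Measuring R&D Performance", "year": "2009", "source": "Web of Science"},
    {"title": "measuring R&D performance.", "year": "2009", "source": "Scopus"},
    {"title": "Avaliação de desempenho em P&D", "year": "2010", "source": "Scielo"},
]
print(len(consolidate(records)))
```

Matching on a normalized title is a deliberately simple choice; records with a DOI could be matched on that identifier instead, which would be more robust to title variants.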

Second stage: selecting the main articles

Titles and abstracts were read in order to select the main articles to be examined under an analytical approach. Articles were selected from this list, adopting criteria such as:

- Those that clearly identified design principles to design a performance measurement system;

- Those that suggested the adoption of specific performance indicators for R&D;

- Those that suggested the adoption of more general performance indicators, involving the integration of R&D activities with other functions in the organization, such as marketing and production;

- Those that suggested the adoption of innovation management frameworks as the basis for the design of performance measurement systems.

The search also included tracking the references cited in the articles selected in the second stage, following what is commonly called the "snowball approach." Books, theses, and dissertations complemented the search.

This process was based on 797 articles, books, theses, and dissertations. The articles effectively used in this work are shown in the references section. They supported identification and definition of the design principles for performance measurement system design for R&D. These principles will be presented in the next section.

5 DESIGN PRINCIPLES FOR R&D ASSESSMENT

Based on the literature review, some guidelines used to design KPIs for R&D were identified. Design principles to establish KPIs within performance measurement systems in general were identified and labeled with the acronym “PM” in order to further describe their application. Design principles related to specific KPIs for R&D assessment were identified in the literature, and the several proposals were organized as “KP” guidelines. More specific design principles related to R&D and innovation structures could be identified in the literature and led to the R&D Process Approaches (“PA”) and Innovation Management Frameworks (“IM”). Finally, some design principles could be identified in the literature as models to structure and organize KPIs as management tools (“MD”).

The following set of Design Principles emerged from the literature; they may be combined to support different kinds of performance measurement systems for R&D activities.

Design principles for PMS in general

Key performance measurement refers to the qualitative and/or quantitative information on an examined phenomenon (Franceschini et al., 2007). Complex environments demand a set of performance measures that must be organized in a systemic, articulated, and balanced logic, providing not only information regarding the achievement of objectives, but also the way by which those objectives were fulfilled.

A PMS allows decisions and actions to be taken based on information because it quantifies the efficiency and effectiveness of past actions through appropriate data collection (Neely, 1998).

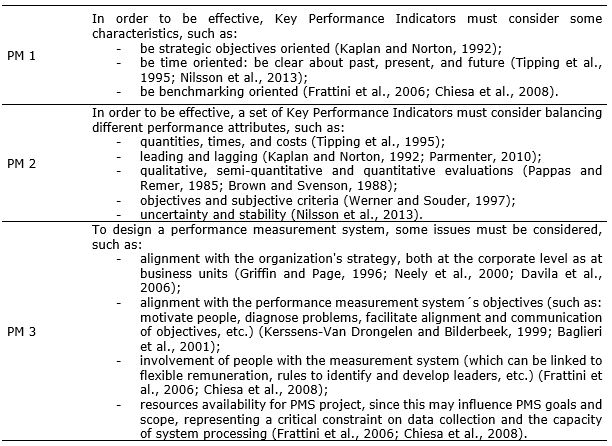

Kaydos (1991) points out some remarkable PMS characteristics, such as mediating the strategy and values communication process, improving the understanding about strategic intentions, and providing alignment between processes and strategic goals’ output and outcome. In this context, some Design Principles were identified (Chart 2).

Chart 2. Design Principles for a general PMS

Source: The authors.

Design principles for specific KPIs to R&D

Historically, some authors have proposed quantitative financial KPIs to evaluate R&D (Galloway, 1971). These quantitative financial KPIs were often based on methods such as Operations Research, Decision Theory, and Econometric Analysis.

A second approach proposes that R&D management must demand a combination of quantitative and qualitative KPIs created by objective and subjective criteria. Some authors have perceived the limitations of the financial approach and have suggested balancing objective and subjective output KPIs—such as number of patents, output quality, and others; and objective and subjective input KPIs—such as employee proficiency, R&D expenses, and others (Moser, 1985).

Both approaches consider R&D as a function (not a process), which is designed to receive a set of well-defined inputs and generate an expected output. They are aligned with first-and-second generation R&D management systems and can be considered a simpler method to evaluate R&D.

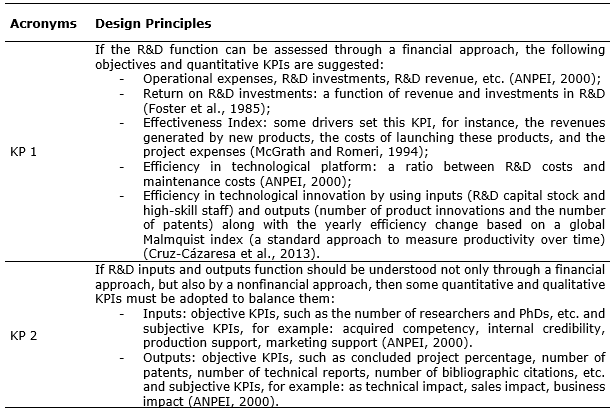

In this context, some design principles that were suggested to develop KPIs for the classic R&D function are shown in Chart 3.

Chart 3. Design principles to create KPIs for the R&D function

Source: The authors.

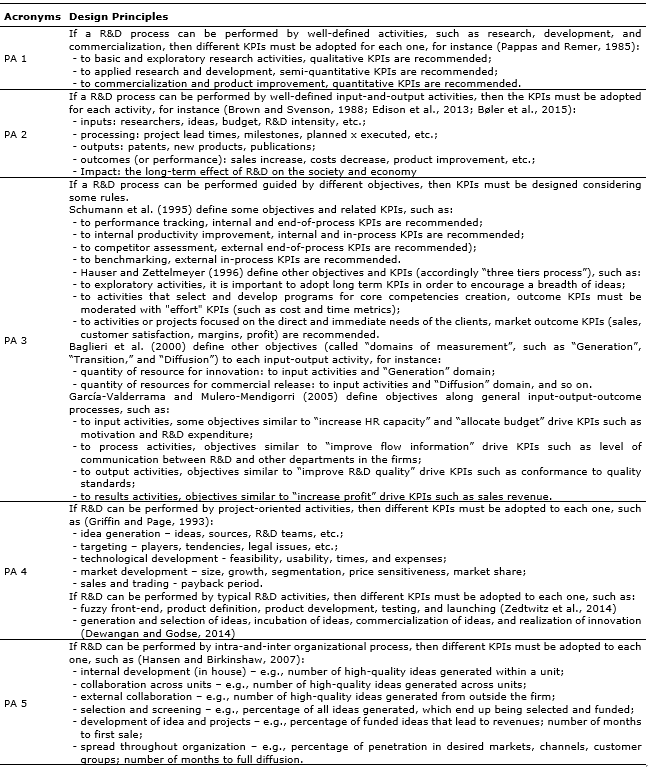

Design principles based on an R&D process approach

The process approach is an important framework for PMS design for R&D. Dewangan and Godse (2014) affirm the importance of focusing on innovation processes and note that each phase of the innovation process has its particular needs. This set of proposals goes beyond the ones seen before, which focus on evaluating inputs and outputs. The process approach bases the choice of KPIs on the procedural logic of a general R&D model (research, development, and marketing) or of a more comprehensive R&D model, according to which managerial processes are performed. Some design principles suggested to support the design of KPIs for R&D processes are shown in Chart 4.

Chart 4. Design principles to create KPIs for R&D processes

Source: The authors.

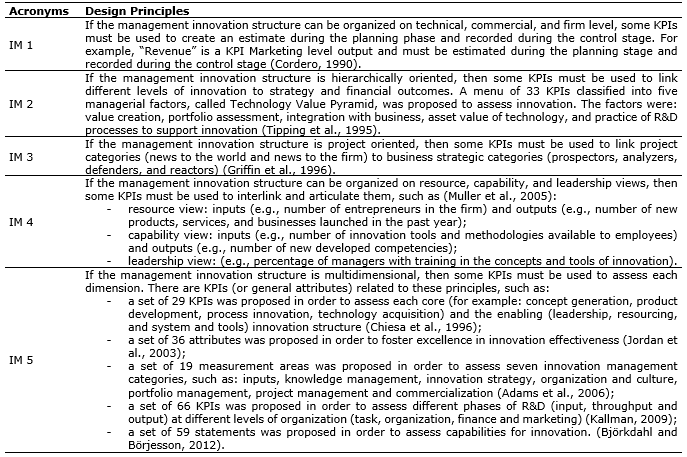

Design principles coming from Innovation Management frameworks

Some innovation management frameworks inspire performance measurement system design in complex environments, such as the ones typically associated with fifth-generation R&D systems. These frameworks are not restricted to an R&D management structure, as they also include a broader management system to promote innovation. Some design principles for performance measurement systems and their corresponding KPIs, related to innovation management frameworks, were identified in the literature; they are displayed in Chart 5.

Chart 5. Design principles based on innovation management frameworks

Source: The authors.

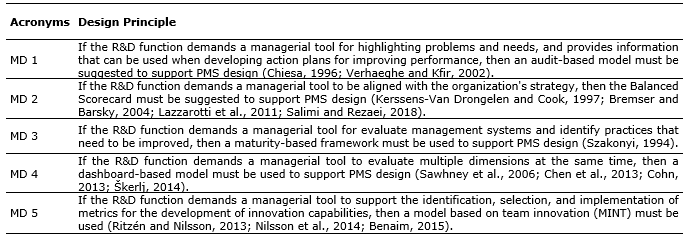

Design Principles to design a PMS model for R&D

In a complex environment, it is reasonable to conceive of a set of performance measures that must be organized in a coordinated and balanced manner, assessing not only whether objectives were met, but also the means by which that was accomplished. Rather than being dispersed across the organization, the KPIs must be structured and organized in order to meet the needs of the PMS. Some design principles to design a PMS model for R&D were identified in the literature, as shown in Chart 6.

Chart 6. Design principles for a PMS model for R&D

The next section will present a proposal for the articulated usage of Design Principles in order to guide a PMS design for the R&D function.

6 DESIGN PRINCIPLES FOR PMSs IN THE FOURTH-AND-FIFTH GENERATION R&D SYSTEMS

The design principles identified in the literature review suggest a set of general rules to design a PMS for fourth-and-fifth generation R&D systems.

First, the objectives and scope of the PMS must be identified, as suggested by Kerssens-Van Drongelen and Bilderbeek (1999) and Baglieri et al. (2000), in order to define the most appropriate KPIs and management system to support the PMS. Second, an evaluation model such as the Balanced Scorecard (Kaplan and Norton, 1992) or an audit model (Chiesa et al., 1996) must be adopted in order to drive the implementation of KPIs and their use as a managerial tool (Neely, 1998). Third, R&D processes must be identified and modeled (Vernadat, 1996), considering not only the internal activities of the R&D division but also the relevant ones outside R&D, as in Cordero (1990). Modelling R&D processes allows for the definition of inputs, outputs, and outcomes as well as the nature of each process and its supporting tangible and intangible resources (Baglieri et al., 2000). Fourth, R&D processes must be aligned with the innovation management structure (Adams et al., 2006; Davila et al., 2006; Cohn, 2013) in order to support the complex and non-linear interactions between operations and strategy that are present in typical fourth-and-fifth generation R&D systems (Rothwell, 1994). Finally, a set of KPIs must be organized in a coordinated, balanced, and articulated manner, integrating a comprehensive PMS (Neely, 1998; Neely et al., 2000; Lima et al., 2008) to improve innovation and R&D management. The following sections address these issues and articulate them with the design principles identified in the literature.

Defining objectives and scope for a PMS for R&D systems

A PMS project must begin with the definition of the objectives of the evaluation system and of its scope as a management tool. Some objectives defined by Kerssens-Van Drongelen and Bilderbeek (1999) and Baglieri et al. (2000) consider the possibility of designing a PMS for strategic control in order to measure R&D impacts (outcome), allow benchmarking of efficiency and output, calibrate resource allocation, monitor the development of activities, and establish managerial bases for motivation and reward, as highlighted by PM 3.

Contingency elements that affect the PMS design must also be identified, for instance, the alignment of R&D with the organization's strategy, the level of people’s involvement with the measurement system, and the availability of resources for the project, as also guided by PM 3.

From such PMS design premises, it becomes necessary to choose the KPIs, whose design guidelines are found in PM 1 and PM 2. These design guidelines define the types of KPIs that will be used, which are: quantitative and financial KPIs (according to KP 1); and non-financial KPIs, whether qualitative or quantitative (according to KP 2). For instance, if the PMS goal is to evaluate project portfolios, a combination of performance indicators would be more appropriate than adopting a single financial vision (KP 2). If the PMS objective is to promote a benchmark among similar companies, KPIs appropriate for this purpose must be used (such as number of patents, ROI, etc.), according to KP 1 and KP 2.

Notably, there is a close relationship between the design principles that orient general PMS issues (such as described in PM 1, PM 2, and PM 3) and the characteristics of R&D and innovation systems that require different approaches to performance evaluation (as presented in KP 1 and KP 2).

Choosing an evaluation model

The Balanced Scorecard (BSC) is a management tool often used by firms as a way of making their intended (deliberate) strategies explicit through objectives and KPIs and of guiding the implementation of the action plans developed from such strategies. Using the BSC as a managerial tool to implement and monitor R&D strategy becomes a natural consequence, as can be observed in the orientations pointed out in MD 2. In order to deploy a BSC to evaluate R&D performance, some design principles must be considered, such as those that prescribe the balance and articulation between KPIs (PM 2); the link with the organization's objectives (PM 1); and the relationship with the managerial and operational processes (from PA 1 to PA 5).

For the adoption of the PMS as an audit (MD 1), it is necessary that the management tool be linked to a benchmarking process (which may be internal and/or external), as guided by PM 1, and that multiple evaluation dimensions be adopted, as directed by IM 5. A variation of this model is based on the evaluation of multiple dimensions in a dashboard format (as directed by MD 4), since such models draw on similar conceptual bases (fundamentally, balance and comprehensiveness in the evaluation process, as pointed out in PM 2 and PM 3) to allow monitoring and management.

In order to adopt the model that evaluates the maturity of the processes contemplated in the R&D function (MD 3), one must follow the Principles suggested in PM 2 and PM 3. The only exception lies in the fact that such a model does not require balancing and articulation between performance indicators, since the central purpose of its adoption is to identify improvement opportunities.

In general, the choice among these models (MD 1, MD 2, MD 3 or MD 4) depends on aspects of the PMS project; for example, on its implementation objectives (PM 1), the organization's strategic guidelines and available resources (PM 3), and the innovation management structure present in the organization (IM 1 to IM 5). These managerial structures are based on business process models that must be known and modeled.
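The relationships described in this subsection can be read as a small lookup table from each evaluation model to the design principles it draws on. The sketch below encodes only the links stated in the text; the names `MODEL_PRINCIPLES` and `models_supported_by`, and the idea of filtering models by the principles a team has already worked through, are illustrative assumptions rather than part of the reviewed literature.

```python
from typing import Dict, List, Set

# Principles each evaluation model draws on, as read from the text of this
# subsection (an interpretation, not an authoritative mapping).
MODEL_PRINCIPLES: Dict[str, List[str]] = {
    "MD 1": ["PM 1", "IM 5"],                                      # audit
    "MD 2": ["PM 1", "PM 2"] + [f"PA {i}" for i in range(1, 6)],   # Balanced Scorecard
    "MD 3": ["PM 2", "PM 3"],                                      # process maturity
    "MD 4": ["PM 2", "PM 3"],                                      # dashboard
}


def models_supported_by(available: Set[str]) -> List[str]:
    """Return the models whose listed principles are all in `available`."""
    return [
        model
        for model, needed in MODEL_PRINCIPLES.items()
        if set(needed) <= available
    ]


# Hypothetical case: general PMS principles addressed, processes not yet modeled.
print(models_supported_by({"PM 1", "PM 2", "PM 3"}))
```

Under this reading, a Balanced Scorecard (MD 2) only becomes available once the process principles PA 1 to PA 5 have been addressed, which mirrors the emphasis the text places on process modeling.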

Modeling Business Processes

Vernadat (1996) argues that process modeling aims to guarantee a precise understanding and uniform representation of the firm, besides providing support to the organization's design and serving as a benchmark for controlling and monitoring its operations. Process modeling thus becomes an important requirement for the design of structured KPIs. The propositions for designing KPIs based on a process view are summarized by PA 1 to PA 5.

For typical R&D activities, when a linear and unidirectional process model is adopted, Principles PA 1 and PA 2 may be enough to guide the design of performance indicators. On the other hand, design principles related to the choice of KPIs (KP 1 and KP 2) must be used as a guideline for the evaluation of each process stage, considering the guiding principles of PMS (PM 1 and PM 2).

To ensure the coupling of R&D activities with the organization’s strategic objectives (as mentioned as one of the PM 3 guidelines), it is suggested that the principles organized in PA 3 be adopted. For example, to improve the internal performance of the development process, process indicators related to time and cost must be adopted.

For a project-oriented organization, the PA 4 Principle points to the need to choose KPIs for each typical step of an R&D project (as directed by KP 1 and KP 2). For instance, regarding the idea generation phase, the adoption of qualitative and subjective indicators is recommended, whereas for the sales phase, financial indicators are more appropriate.

For an organization that has complex interactions in its R&D process (as indicated by PA 5), specific KPIs that assess the effectiveness of these interfaces become necessary. Notably, these KPIs must be qualitative and subjective, as pointed out in KP 2.

In summary, different R&D processes must be known and modeled in order to incorporate specific key performance indicators (such as presented in PA 1 to PA 5). Such KPIs must follow specific (KP 1 and KP 2) and general (PM 1, PM 2, and PM 3) design guidelines in order to fit into R&D management processes. Notably, such KPIs must fit in the innovation structures supported by these processes.
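The selection logic summarized above can be sketched as a function that takes a description of the R&D process and returns the principles to consult. This is a hedged illustration: the class `RnDProcessProfile`, its boolean fields, and the function `process_principles` are hypothetical names, and the rules encode only the cases stated in this subsection.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RnDProcessProfile:
    """Hypothetical description of how an organization's R&D process is shaped."""
    linear_unidirectional: bool = False
    needs_strategy_coupling: bool = False
    project_oriented: bool = False
    complex_interfaces: bool = False


def process_principles(profile: RnDProcessProfile) -> List[str]:
    """List the principles the text associates with a given process profile."""
    selected: List[str] = []
    if profile.linear_unidirectional:
        selected += ["PA 1", "PA 2"]
    if profile.needs_strategy_coupling:
        selected.append("PA 3")
    if profile.project_oriented:
        selected.append("PA 4")
    if profile.complex_interfaces:
        selected.append("PA 5")
    # The specific (KP) and general (PM) guidelines apply in every case.
    return selected + ["KP 1", "KP 2", "PM 1", "PM 2", "PM 3"]


profile = RnDProcessProfile(project_oriented=True, complex_interfaces=True)
print(process_principles(profile))
```

The flags are independent on purpose, since an organization may, for example, be project-oriented and also have complex interfaces, in which case both PA 4 and PA 5 are relevant.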

Aligning R&D processes with Innovation processes

The R&D and Innovation processes need to be supported by an Innovation Management structure, as suggested by Goffin and Mitchell (2005) and Tidd and Thuriaux-Alemán (2016). In the case of a firm with a simple innovation structure, competing in a low-dynamism environment that only demands incremental improvements in products and services, KPIs must be oriented to technical and commercial levels, within a planning and control approach (such as presented in IM 1).

For hierarchical innovation structures, the use of Design Principle IM 2 is recommended, since it foresees the articulation of different indicators for different hierarchical levels.

For instance, when value creation (the highest degree element in the hierarchy) is concerned, the use of financial indicators (KP 1) is recommended. For integration with business, the use of qualitative indicators (KP 2) specific for project management (KP 4) is preferred.

For project-oriented innovation, the use of different types of KPIs (KP 1 and KP 2) is suggested, according to the degree of novelty of each project (as guided by IM 3). Moreover, aligning the strategic objectives supported by such projects with the strategic goals of the organization (PM 3) is beneficial. For instance, existing projects demand typical benchmarking indicators, while new projects may demand indicators that assess market reaction and behavior.

For more comprehensive and complex innovation structures, different approaches may be used, as highlighted by Principles IM 4 and IM 5. For instance, for innovation systems embedded in the firm’s quality policies, the use of the principles contained in IM 5 is suggested, drawing on the proposals of Jordan et al. (2003) and Adams et al. (2006), while innovation systems that require the linkage of their strategic objectives with organizational resources and capabilities can be evaluated using the guidelines proposed by Muller et al. (2005) (IM 4). For example, a market leadership strategy points to a strong emphasis on the definition of a solid technological platform in processes and for product development. Some elements present in a corporate strategy, such as leadership, culture, politics, and collaboration between partners, are covered by IM 5. They are particularly relevant in highly dynamic markets, which require frequent changes in the corporation's business models.

Some of these IM principles can be appropriate for service organizations (Den Hertog et al., 2010; Johansson and Smith, 2015), can support open innovation structures (Chesbrough, 2003; Erkens et al., 2014), and can serve as a grounded starting point for assessing innovation capability (Björkdahl and Börjesson, 2012; Nilsson and Ritzén, 2014).

Choosing key performance indicators

The proposed set of design principles (which include the definition of purpose and scope, the adoption of the evaluation model, the need for process modelling, and the alignment of such processes with the innovation structure) ultimately converges on the choice of the most appropriate indicators to measure (a more complex) R&D performance. Therefore, a multidimensional perspective, as suggested by Cho (2018), is crucial to dealing with this complexity, which is a typical characteristic of 4th- and 5th-generation R&D systems.

In a high-complexity environment, it is mandatory to draw up an effective PMS, which allows one to organize, coordinate, balance, and articulate different indicators associated with the organization's strategy. It thus allows the firm to choose consciously between resilience policies and adaptation strategies. Such general design recommendations for PMS may be found, for instance, in Lima et al. (2008).

As to R&D and Innovation management systems, while Design Principles PM 1, PM 2, and PM 3 present more comprehensive guidelines for PMS design, Design Principles KP 1 and KP 2 point to the specific nature of the KPIs that must be considered in such a PMS. The complexity present in the firm’s Innovation System influences not only the innovation management model to be adopted, but also, in this context, the PMS that must be developed for R&D.

For simple structures, a qualitative and subjective evaluation of the preliminary stages of the research process, linked to a quantitative and objective evaluation of the marketing stages, would be sufficient when designing the R&D PMS. As Innovation becomes organic to the firm, more complex and comprehensive conceptual frameworks will require more sophisticated KPIs, as highlighted by IM 4 and IM 5, which guide the articulation of Innovation Systems with different KPI profiles. The KPIs suggested in the bibliographic references already mentioned create a starting pool of options that is appropriate for completing a design proposition associated with this class of problem.

7 CONCLUSION

That which is not measured cannot be managed. Although there are authors who do not consider R&D performance evaluation truly possible (Schwan, 2016), the present research seeks to find possible ways to allow a clearer perception of how the R&D processes are performing.

A challenge is that the application of the measurement system and the metrics it entails should not be perceived as a bureaucratic exercise that limits or discourages creative time (Chiesa et al., 2009; Saunila, 2014). Such perception indeed undermines the benefits of performance measurement systems. Instead, the measurement system ought to promote insights and behavioral changes that positively affect the firms’ ambidexterity in terms of innovation process and climate (Benaim, 2015). Measuring R&D and Innovation is essential, because an organization has to improve its innovation capability to become competitively innovative, and, further, to truly manage its business in the present times (Saunila and Ukko, 2012).

This paper does not propose a new conceptual framework for R&D evaluation. It does recognize the merit of several conceptual frameworks developed for this purpose that one may find while searching through the literature. In fact, the research presented a general design concept through a set of Design Principles, which the authors hope will help managers develop their own useful and effective performance measurement structures.

The systematic review of literature is a fundamental step of the Design Science Research approach. After all, a deficient identification of the available conceptual principles and frameworks, as well as the lack of knowledge of present design propositions to the problem class at hand, would make it impossible to establish and highlight the Design Principles which may guide the development of a specific new PMS focused on solving the specific needs of a given organization. Thus, the Design Principles, as presented here, bring up a set of guidelines and recommendations that may be useful for the development of new design solutions, or, in practice, for the overall design of a specific PMS.

This paper, however, stops at the beginning of its own R&D process, and naturally sets its own follow-up agenda. The next step of the current research initiative will be the development and field testing of a fully developed Design Proposition for PMS for contemporary Innovation Systems. There, R&D activities are currently networked both inside and outside the firm to a considerable degree, in a way that might go well beyond Rothwell’s original concept of 4th and 5th generation R&D.

For that reason, this research and development effort in Production Engineering will continue to pursue the necessary evolution in its approaches and results in order to address the design of new innovation activities. Another reason is the development of new tools and metrics for managers who need quick and accurate information, as these are necessary for adaptation and change when facing the truly complex competitive environment currently experienced by organizations in all industries.

REFERENCES

Adams, R.; Bessant, J. et al. (2006), “Innovation management measurement: A review”, International Journal of Management Reviews, Vol. 8, Nº 1, pp. 21-47.

ANPEI (2000) Indicadores Empresariais de Inovação Tecnológica, ANPEI - Associação Nacional de Pesquisa e Desenvolvimento das Empresas Inovadoras, São Paulo, Brasil.

Baglieri, E.; Chiesa, V. et al. (2000). “Evaluating Intangible Assets: The Measurement of R&D Performance.” Research Division Working Paper No. 01/49. Available at SSRN: https://ssrn.com/abstract=278260 or http://dx.doi.org/10.2139/ssrn.278260.

Benaim, A. (2015), Innovation Capabilities – Measurement, Assessment and Development, Licentiate thesis, Faculty of Engineering, Department of Design Sciences, Lund University, Sweden.

Björkdahl, J., Börjesson, S. (2012), “Assessing firm capabilities for innovation”. International Journal of Knowledge Management Studies, Vol. 5, Nº 1/2, pp. 171–185

Bøler, E.; Moxnes, A.; Ulltveit-Moe, K. (2015), "R&D, international sourcing, and the joint impact on firm performance”, American Economic Review, Vol. 105, Nº 12, pp. 3704–3739

Boston Consulting Group (2006), Senior Management Survey. The Boston Consulting Group.

Bremser, W.; Barsky, N. (2004). “Utilizing the Balanced Scorecard for R&D Performance Measurement.”, R&D Management, Vol. 34, Nº 3, pp. 229–238

Brown, M.; Svenson, A. (1988), “Measuring R&D Productivity: The Ideal System Measures Quality, Quantity and Cost, is Simple, and Emphasizes Evaluation of R&D Outcomes rather than Behaviors.” Research Technology Management, Vol. 41, Nº 6, pp. 30–35.

Bunge, M. (1967). Scientific Research II: The Search for Truth, Springer Verlag, Berlin.

Chesbrough, H. (2003). “The era of open innovation” MIT Sloan Management Review, Vol. 44, Nº. 3, pp. 35-41.

Chen, J.; Sawhney, M.; Neubaum, D. (2013). “An Empirically Validated Framework for Measuring Business Innovation” SSRN Electronic Journal, available from: https://dx.doi.org/10.2139/ssrn.2178114

Chiesa, V.; Frattini, F. et al. (2007), “How do measurement objectives influence the R&D performance measurement system design? Evidence from a multiple case study”, Management Research News, Vol. 30, No. 3, pp. 187-202.

Chiesa, V.; Coughlan, P. et al. (1996). “Development of a Technical Innovation Audit.” Journal of Product Innovation Management. Vol. 13, Nº 2, pp. 105–136.

Chiesa, V.; Frattini, F. (2009), Evaluation and Performance Measurement of Research - Techniques And Perspectives For Multi-Level Analyses. Ed. Edward Elgar, Cheltenham, UK.

Chiesa, V.; Lazzarotti, V. et al. (2008). “Designing a Performance Measurement System for the Research Activities: A Reference Framework and an Empirical Study”, Journal of Engineering and Technology Management Vol. 25, pp. 213–226.

Cho, Y. (2018). “Assessing the R&D Effectiveness and Business Performance”, STI Policy Review, Vol. 9, Nº 1, pp. 1-29

Cohn, S. (2013), “A Firm-Level Innovation Management Framework and Assessment Tool for Increasing Competitiveness.” Technology Innovation Management Review, October 2013, pp. 6–15.

Cordero, R. (1990). “The Measurement of Innovation Performance in the Firm: An Overview.” Research Policy, Vol 19, Nº 2, pp. 185–192.

Cruz-Cázares, C.; Bayona-Sáez, C.; García-Marco, T. (2013). “You can’t manage right what you can’t measure well: technological innovation efficiency”. Research Policy, Vol. 42, No. 6-7, pp. 1239–1250

Davila, T.; Epstein, M.; Shelton, R. (2006), Making Innovation Work: How to Manage It, Measure It, and Profit from It, Wharton School Publishing, New Jersey.

Den Hertog, P.; van der Aa, W.; Jong, M. W. (2010), “Capabilities for managing service innovation: towards a conceptual framework”, Journal of Service Management, Vol 21, No. 4, pp. 490–514.

Dewangan, V.; Godse, M. (2014). “Towards a holistic enterprise innovation performance measurement system”, Technovation, Vol. 34, No. 9, pp. 536–545.

Dresch, A.; Lacerda, D. P.; Antunes Jr. J. A. V. (2013), Design Science Research: A Method for Science and Technology Advancement, Ed. Springer, Switzerland.

Edison, H.; bin Ali, N.; Torkar R. (2013), “Towards Innovation Measurement in the Software Industry”, Journal of Systems and Software, Vol. 86, No. 5, pp. 1390–1407.

Erkens, M.; Wosch, S.; Luttgens, D. et al. (2014), “Measuring open innovation. A toolkit for successful innovation teams”, Performance, Vol. 6, No. 2, pp. 12-23.

Foster, R. N.; Linden, L. H.; Whiteley, R. L. et al. (1985), “Improving the return in R&D – II”. Research Management, Vol. 28, No. 2., pp. 13-22.

Franceschini, F.; Galetto, M.; Maisano, D. (2007), Management by Measurement: Designing Key Indicators and Performance Measurement Systems, Ed. Springer Science & Business Media.

Frattini, F.; Lazzarotti, V. et al. (2006). “Towards a System of Performance Measures for Research Activities: Nikem Research Case Study”, International Journal of Innovation Management, Vol. 10, No. 4, pp. 425–454.

Galloway, E. (1971), “Evaluating R&D Performance - Keep It Simple”, Research Management, Vol. 14, No. 2, pp. 50-58.

García-Valderrama, T.; Mulero-Mendigorri, E. (2005), “Content Validation of a Measure of R&D Effectiveness”, R&D Management, Vol. 35, No. 3, pp. 311–331.

Gawer, A.; Cusumano, M. (2014). “Industry Platforms and Ecosystem Innovation.” Journal of Product Innovation Management, Vol. 31, No. 3, pp. 417–433.

Goffin, K.; Mitchell, R. (2005), Innovation Management: Strategy and Implementation Using the Pentathlon Framework, Ed. Palgrave Macmillan, United Kingdom.

Griffin, A.; Page, A. (1993), “An Interim Report on Measuring Product Development Success and Failure”, Journal of Product Innovation Management, Vol. 10, pp. 291-308.

Griffin, A.; Page, A. (1996), “PDMA Success Measurement Project: Recommended Measures for Product Development Success and Failure”, Journal of Product Innovation Management, Vol. 13, No. 6, pp. 478–496.

Hansen, M.; Birkinshaw, J. (2007), “The Innovation Value-Chain.” Harvard Business Review, Vol. 85, No. 6, pp. 121–130.

Hauser, J.; Zettelmeyer, F. (1996), “Metrics to Evaluate R,D&E”, Research-Technology Management, Vol. 40, No. 4, pp. 32–38.

Jensen, P.; Webster, E. (2004), “Examining Biases in Measures of Firm Innovation”, paper presented in Dynamics of Industry and Innovation: Organizations, Networks and Systems, Copenhagen, Denmark, June 27-29, 2005

Johansson, A.; Smith, E. (2015), Innovation in Service Organizations – The development of a suitable innovation measurement system, Master’s Thesis, Department of Business Studies, Uppsala University, Sweden.

Jordan, G.; Streit, L.; Binkley. J. S. (2003), “Assessing and improving the effectiveness of national research laboratories”, IEEE Transactions on Engineering Management, Vol. 50, No. 2, pp. 228–235.

Kallman, K. (2009), Innovation Metrics – keys to increase competitiveness, Master’s Thesis Within International Business in Stockholm School of Economics.

Kaplan, R.; Norton, D. (1992), “The balanced scorecard – measures that drive performance”, Harvard Business Review, Vol. 70, No. 1, January-February, pp. 71-79.

Kaydos, W. J. (1991), Measuring, Managing, and Maximizing Performance: What Every Manager Needs to Know About Quality and Productivity to Make Real Improvements in Performance, Ed. Productivity Press.

Kerssens-Van Drongelen, I.; Nixon, B.; Pearson, A. (2000), “Performance measurement in Industrial R&D”, International Journal of Management Reviews, Vol. 2, No. 2, pp. 111-143.

Kerssens-Van Drongelen, I.; Bilderbeek, J. (1999), “R&D Performance Measure - More than Choosing a set of Metrics”, R&D Management, Vol. 29, No. 1, pp. 35-46.

Kerssens-van Drongelen, I.; Cooke, A. (1997), “Design Principles for the Development of Measurement Systems for Research and Development Processes.”, R&D Management, Vol. 27, No. 4, pp. 345–357.

Lazzarotti, V.; Manzini, R.; Mari, L. (2011), "A model for R&D performance measurement” International Journal of Production Economics. Vol. 134, No. 1, pp. 212–223.

Lima, E.; Costa, S.; Angelis, J. (2008), “Framing Operations and Performance Strategic Management System Design Process”, Brazilian Journal of Operations & Production Management, Vol. 5, No. 1, pp. 23-46.

Lopes, A.; Kissimoto, K.; Salerno, M. et al. (2016), “Innovation Management: a Systematic Literature Analysis of the Innovation Management Evolution”, Brazilian Journal of Operations & Production Management, Vol. 13, No. 1, pp. 16-30.

McGrath, M. E.; Romeri, M. N. (1994), “The R&D Effectiveness Index: A Metric for Product Development Performance World Class Design to Manufacture”, Journal of Product Innovation, Vol. 1, No. 4, pp. 24-31.

Milbergs, E.; Vonortas, N. (2005) “Innovation Metrics: Measurement to Insight”. White Paper of National Innovation Initiative, 21st Century Innovation Working Group. IBM Corporation, 2005.

Moser, M. (1985) “Measuring Performance in R&D Settings”, Research Management, Vol. 28, No. 5, pp. 31-33.

Muller, A.; Välikangas, L.; Merlyn, P. (2005), “Metrics for innovation: guidelines for developing a customized suite of innovation metrics”, Strategy & Leadership, Vol 33, No. 1, pp. 37–45.

Neely, A. (1998), Measuring Business Performance: Why, What and How. Economist Books, London.

Neely, A. (1999), “The performance measurement revolution: why now and what next?” International Journal of Operations & Production Management, Vol. 19, No. 2, pp. 205-228.

Neely, A.; Mills, J.; Platts, K. et al. (2000), “Performance measurement system design: developing and testing a process-based approach”, International Journal of Operations & Production Management, Vol. 20, No. 10, pp. 1119-1145.

Nilsson, S.; Ritzén, S. (2014), “Exploring the Use of Innovation Performance Measurement to Build Innovation Capability in a Medical Device Company”, Creativity and Innovation Management, Vol. 23, No. 2, pp. 183–198.

Nilsson, S.; Wallin, J.; Benaim, A. et al. (2013), Re-Thinking Innovation Measurement to Manage Innovation Related Dichotomies in Practice, paper presented in 13th International CINet Conference - Continuous Innovation Network Conference, Rome, Italy, 2012.

Oliveira, A. (2010). Uma Avaliação de Sistemas de Medição de Desempenho para P&D Implantados em Empresas Brasileiras frente aos Princípios de Construção Identificados Na Literatura. Tese de Doutorado em Engenharia de Produção, Universidade Federal do Rio de Janeiro.

Ojanen, V.; Vuola, O. (2006), “Coping with the multiple dimensions of R&D performance analysis”, International Journal of Technology Management, Vol. 33, No. 2-3, pp. 279-290.

Pappas, R. A.; Remer, D. S. (1985), “Measuring R&D Productivity”, Research Technology Management, Vol. 28, No. 3, pp. 15–22.

Parmenter, D. (2010), Key Performance Indicators, Developing Implementing and Using Winning KPIs, 2nd ed, Ed. John Wiley and Sons.

Pereira, G.; Santos, A.; Cleto, M. (2018), “Industry 4.0: glitter or gold? A systematic review”, Brazilian Journal of Operations & Production Management, Vol. 15, No. 2, pp. 247-253.

Petticrew, M.; Roberts, H. (2006). Systematic Reviews in the Social Sciences: A Practical Guide, Blackwell Publishing Professional.

Ritzén, S.; Nilsson, S. (2013). “Designing and implementing a method to build Innovation Capability in product development teams”. Presented at the International Conference on Engineering Design, pp. 1–5.

Romme, A.; Endenburg, G. (2006). “Constructing Principles and Design Rules in the Case of Circular Design”, Organization Science, Vol. 17, No. 2, pp. 287–297.

Rothwell, R. (1994). “Towards the Fifth-Generation Innovation Process”, International Marketing Review, Vol. 11, No. 1, pp. 7–31.

Salerno, M. (2009). “Reconfigurable Organization to Cope with Unpredictable Goals.”, International Journal of Production Economics, Vol. 122, Nº 1, pp. 419–28.

Salimi, N.; Rezaei, J. (2018), “Evaluating firms’ R&D performance using best worst method”, Evaluation and Program Planning, No. 66, pp. 147–155.

Saunila, M. (2014), Performance Management through Innovation Capability in SME. PhD thesis, Finland.

Saunila, M.; Ukko, J. (2012), “A conceptual framework for the measurement of innovation capability and its effects” Baltic Journal of Management, Vol. 7, No. 4, pp. 355-375.

Sawhney, M.; Wolcott, R. C.; Arroniz, I. (2006), “The 12 Different Ways for Companies to Innovate”, IEEE Engineering Management Review, Vol. 35, No. 1, pp. 1–18.

Schumann, P. A.; Ransley, D. L.; Prestwood, D. C. L. (1995), “Measuring R&D performance”, Research Technology Management, Vol. 38, No. 3, pp. 45-54.

Schwan, S. (2016), “Organizing for Breakthrough Innovation.” McKinsey Quarterly, Jan. 2016.

Simon, H. (1969), The Sciences of the Artificial, 3rd ed, The MIT Press.

Škerlj, T (2014), "Measuring Innovation Excellence: Measurement Framework for PWC’s Wheel of Innovation Excellence Concept," Proceedings of the Management, Knowledge and Learning International Conference, Slovenia, 2014.

Szakonyi, R. (1994), “Measuring R&D effectiveness – I”, Research Technology Management, Vol. 37, No. 2, pp. 27-32.

Tidd, J.; Bessant, J. et al. (2005), Managing Innovation: Integrating Technological, Market and Organizational Change, 3rd edition, Wiley, England.

Tidd, J.; Thuriaux-Alemán, B. (2016), “Innovation Management Practices: Cross-Sectorial Adoption, Variation, and Effectiveness.” R&D Management, Vol. 46, S3, pp. 1024-1043.

Tipping, J. W.; Zeffren, E.; Fusfeld, A. R. (1995). “Assessing the Value of Your Technology.” Research Technology Management, Vol. 38, No. 5, pp. 22–39.

Van Aken, J. (2004), “Management Research Based on the Paradigm of the Design Sciences: The Quest for Field-Tested and Grounded Technological Rules.” Journal of Management Studies, Vol. 41, No. 2, pp. 219-246.

Van Aken, J. (2013). “Design Science: Valid Knowledge for Socio-technical System Design” Communications in Computer and Information Science, Vol. 388, pp. 1–13.

Verhaeghe, A.; Kfir, R. (2002), “Managing innovation in a knowledge intensive technology organisation (KITO)”, R&D Management, Vol. 32, No. 5, pp. 409-417.

Vernadat, F. (1996), Enterprise Modeling and Integration: Principles and Applications, Ed. Chapman & Hall, London.

Werner, B.; Souder, W. (1997), “Measuring R&D performance - state of the art”, Research Technology Management, Vol. 40, No. 2, pp. 34-42.

Zedtwitz, M.; Friesike, S.; Gassmann, O. (2014), “Managing R&D and New Product Development” in Dodgson, M.; Gann, D.; Phillips, N. (Ed), The Oxford Handbook of Innovation Management, Oxford University Press.

Received: 27 Feb 2018

Approved: 16 Apr 2019

DOI: 10.14488/BJOPM.2019.v16.n2.a6

How to cite: Oliveira, A. R.; Proença, A. (2019), “Design principles for research & development performance measurement systems: a systematic literature review”, Brazilian Journal of Operations & Production Management, Vol. 16, No. 2, pp. 227-240, available from: https://bjopm.emnuvens.com.br/bjopm/article/view/787 (access year month day).